The HQX filters, namely hq2x, hq3x and hq4x, are well-known pixel art scalers. They are mainly used by old console (such as NES) emulators to provide a smooth rendering of the games. It was developed around 2003 by Maxim Stepin.

There are better pixel-art magnification algorithm nowadays, like xBR to name one. Microsoft also did some research to produce another a scaler. Still, HQX is a stable reference, and was requested on FFmpeg bug tracker. I decided to have a look into it and see if we could implement it. After all, we already have Super2xSaI, and people seems to like the idea of encoding pretty-scaled record of their old school video game sessions.

Unfortunately, the reference code is more than 12.000 lines of unrolled C code. This code is basically duplicated without a thought in every single project. And still, no one seems to have a clue about the algorithm. I didn't want to reproduce that mistake in FFmpeg.

Common knowledge about the filters

Let's begin with the common public knowledge about these filters. There are 3 filters with a similar algorithm: hq2x, hq3x and hq4x, which respectively scale by 2, 3 and 4 the input image. There is no temporal interpolation, it's just a 1-to-1 frame scaling.

All 3 algorithms use a 3x3 area around the current pixel to determine the interpolation to use. So for each pixel, a 8-bit pattern is build: for every of the 8 surrounding pixels, it checks if there is a difference with the current pixel (in the center). This difference is defined using YUV thresholds: 48, 7 and 6 for luminance, chroma blue and chroma red: if one difference in one of the plane is above the threshold the bit will be 1 in the pattern, otherwise 0.

Then comes the unrolled code. Basically, it's a thousands lines switch case for all the 256 cases of the 8-bit patterns. Each case has code to fill a 2x2 (hq2x), 3x3 (hq3x) or 4x4 (hq4x) block, using various interpolations methods. There are 10 interpolations per pixel possible, each of them actually being a 2 or 3 pixels interpolation with different factors.

And that's all we know about the algorithm itself.

First attempts

I was naive at first and thought it would be possible to reduce the number of lines by making use of the C preprocessor. After all, the code is pretty redundant. It was a huge a mistake. I wasted a considerable amount of time on this. I might have saved something like 3000 or 4000 lines, but I hit several walls in the process. Typically, in some pattern cases, there are extra difference conditions, which are not stored into the pattern (the switch case would have been even larger...), which complicated things.

So I tried to actually understand the algorithm, manually. Like, you know, with pen & paper. I did that for a few patterns in order to get a clue, but it was an extremely slow process and it actually didn't help me much.

Visual representation of hq2x

So we have hq2x, the simplest of the 3 filters. We know that for 1 pixel, 2x2 will be produced. We can safely assume that the algorithm for one pixel is the same as the 3 others with some symmetry.

There are 256 patterns for 10 interpolations methods. So one way of representing this is to check what are all the patterns that triggers each interpolation.

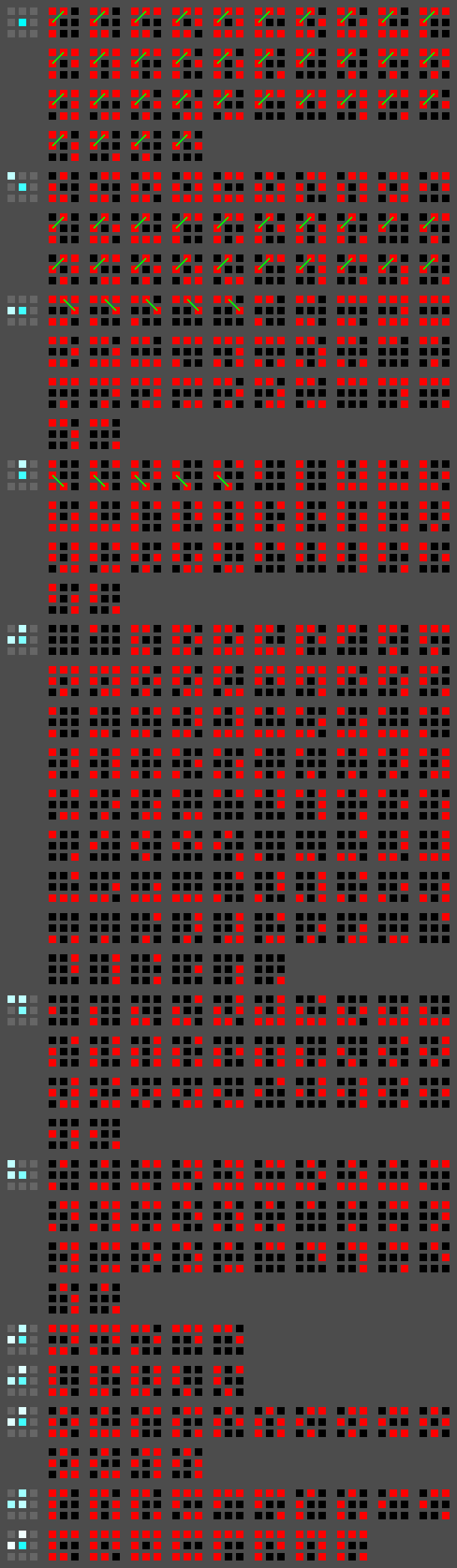

So I wrote some python code to extract all the information I needed from the reference code, and used Cairo to represent this:

On the left (the 3x3 blocks with the blue gradients) is the chosen interpolation when one of the combination on the right is matched. More blue means a more important coefficient, more white means a less important coefficient. Center pixel is the current one.

On the right is the list of all the different combinations triggering that interpolation:

- When a pixel is red it means there must be a visual difference with the current pixel (center).

- When a pixel is black it means there must be no visual difference with the current pixel. The center pixel is also in black to keep in mind that it's close to the other black pixels.

- When present, the green line links 2 pixels which must have a visual difference (in addition to all the other visual diff conditions represented in red).

These green lines correspond to the extra if checks we find in some of the

pattern cases in the original code. Warning here: we are displaying only the

true case of the if, which means the else case is not displayed. As

a consequence, they must be treated before any pattern with no condition.

In various cases we can observe a common pattern in the combinations. For example for the first interpolation (which actually isn't, we just pick the current pixel), we can distinguish the same pattern with some "optional" differences.

Unfortunately, factorizing the combinations is not as easy as it sounds. In order to define if a pixel value is affecting the chosen interpolation, I used a very stupid and slow algorithm which can be summarized with the following pseudocode:

For a given interpolation:

Compare every combination with each other:

Define a difference pattern between the two

Generate all combinations that need to be met in the subset to assume that they are optional

If all the combinations can be found, add the pattern to a list

Keep the best patterns (basically remove those who are a subset of another)

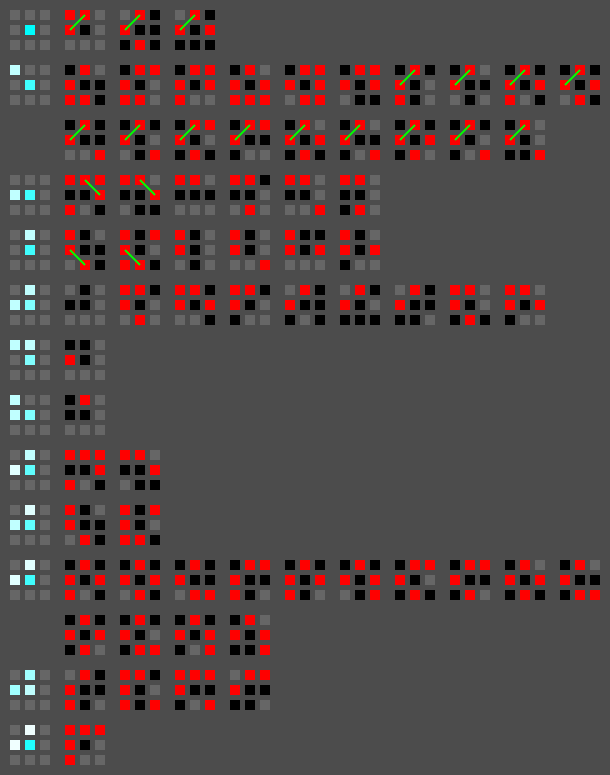

And so this came out:

The semantic is the same as the previous picture, except that now grays pixels represent pixels for which the value doesn't matter.

Getting code out of it

This latest picture can be translated back into code easily now. We just have to respect two things:

- Make sure the conditions with a diff inside (green stroke) are treated first,

- Deal with simple cases first so a maximum of interpolations can be covered

with a minimum of

if. It also allows to have a finalelsebranch which doesn't need to cover the interpolation with most conditions.

You get this:

if ((P(0xbf,0x37) || P(0xdb,0x13)) && WDIFF(w1, w5))

return interp_2px(w4, 3, w3, 1, 2);

if ((P(0xdb,0x49) || P(0xef,0x6d)) && WDIFF(w7, w3))

return interp_2px(w4, 3, w1, 1, 2);

if ((P(0x0b,0x0b) || P(0xfe,0x4a) || P(0xfe,0x1a)) && WDIFF(w3, w1))

return w4;

if ((P(0x6f,0x2a) || P(0x5b,0x0a) || P(0xbf,0x3a) || P(0xdf,0x5a) ||

P(0x9f,0x8a) || P(0xcf,0x8a) || P(0xef,0x4e) || P(0x3f,0x0e) ||

P(0xfb,0x5a) || P(0xbb,0x8a) || P(0x7f,0x5a) || P(0xaf,0x8a) ||

P(0xeb,0x8a)) && WDIFF(w3, w1))

return interp_2px(w4, 3, w0, 1, 2);

if (P(0x0b,0x08))

return interp_3px(w4, 2, w0, 1, w1, 1, 2);

if (P(0x0b,0x02))

return interp_3px(w4, 2, w0, 1, w3, 1, 2);

if (P(0x2f,0x2f))

return interp_3px(w4, 14, w3, 1, w1, 1, 4);

if (P(0xbf,0x37) || P(0xdb,0x13))

return interp_3px(w4, 5, w1, 2, w3, 1, 3);

if (P(0xdb,0x49) || P(0xef,0x6d))

return interp_3px(w4, 5, w3, 2, w1, 1, 3);

if (P(0x1b,0x03) || P(0x4f,0x43) || P(0x8b,0x83) || P(0x6b,0x43))

return interp_2px(w4, 3, w3, 1, 2);

if (P(0x4b,0x09) || P(0x8b,0x89) || P(0x1f,0x19) || P(0x3b,0x19))

return interp_2px(w4, 3, w1, 1, 2);

if (P(0x7e,0x2a) || P(0xef,0xab) || P(0xbf,0x8f) || P(0x7e,0x0e))

return interp_3px(w4, 2, w3, 3, w1, 3, 3);

if (P(0xfb,0x6a) || P(0x6f,0x6e) || P(0x3f,0x3e) || P(0xfb,0xfa) ||

P(0xdf,0xde) || P(0xdf,0x1e))

return interp_2px(w4, 3, w0, 1, 2);

if (P(0x0a,0x00) || P(0x4f,0x4b) || P(0x9f,0x1b) || P(0x2f,0x0b) ||

P(0xbe,0x0a) || P(0xee,0x0a) || P(0x7e,0x0a) || P(0xeb,0x4b) ||

P(0x3b,0x1b))

return interp_3px(w4, 2, w3, 1, w1, 1, 2);

return interp_3px(w4, 6, w3, 1, w1, 1, 3);

The P() macro checks if the pattern is matched. The first operand filters

the pixels differences that matter, and the second operand is the expected

result. WDIFF() allows to check for an extra difference. The

interp_[23]px() functions are the interpolation functions with the values

and their coefficients.

Using some macro magic, you can then easily get the version of this function for the 3 other pixels.

And using the same method, you can obtain the code to generate a function for 2x1 pixels for hq3x, and 2x2 pixels for hq4x.

Final code

The complete and final hqx code for all the filters can be found in FFmpeg.

The information extraction, picture debug, and code generator can be found in

my hqx GitHub repository. Use make hq2x.png to get the

picture, and make code2 to generate the code above.

At the moment of writing the post, libavfilter/vf_hqx.c is 560 lines of

code. As a matter of comparison, the code from the (modern) reference

implementation is about 12000 lines of code:

% wc -l hqx-read-only/src/*.[ch]

141 hqx-read-only/src/common.h

2809 hqx-read-only/src/hq2x.c

3787 hqx-read-only/src/hq3x.c

5233 hqx-read-only/src/hq4x.c

138 hqx-read-only/src/hqx.c

55 hqx-read-only/src/hqx.h

38 hqx-read-only/src/init.c

12201 total

So basically, the code in FFmpeg is more than 21 times smaller. It's possible the reference code is faster, but it's a pain to bench and I don't feel it's important: people are going to use this to scale GameBoy like resolution game (160x144 pixels). That said, I'm still curious, so feel free to do the benchmark and tell the world.

Anyway, the code doesn't look really slow. With the help of threading, I'm at 110 FPS on a 320x240 input with hq4x on my desktop (an old first generation i7). I think that's good enough for now.

Next

Now I believe the code could be simplified even more. If you look at the

picture for hq2x, you'll notice that there is a horizontal/vertical symmetry

somehow. The representation also doesn't show the else case for the diff,

which could help somehow giving a more meaningful code. We can probably guess

the coefficients for a single interpolation in a more logical way instead of

doing some pattern matching.

If you are interested in working on this, feel free to discuss it with me. I don't plan to work on the filter again, but I'd certainly be happy to hear about further research on it.

Ah, and before you ask: no, I didn't contact Maxim Stepin for information on his algorithm, I wanted to have some fun, and it would have been cheating ;)